An Improvements of Deep Learner Based Human Activity Recognition with the Aid of Graph Convolution Features

Abstract

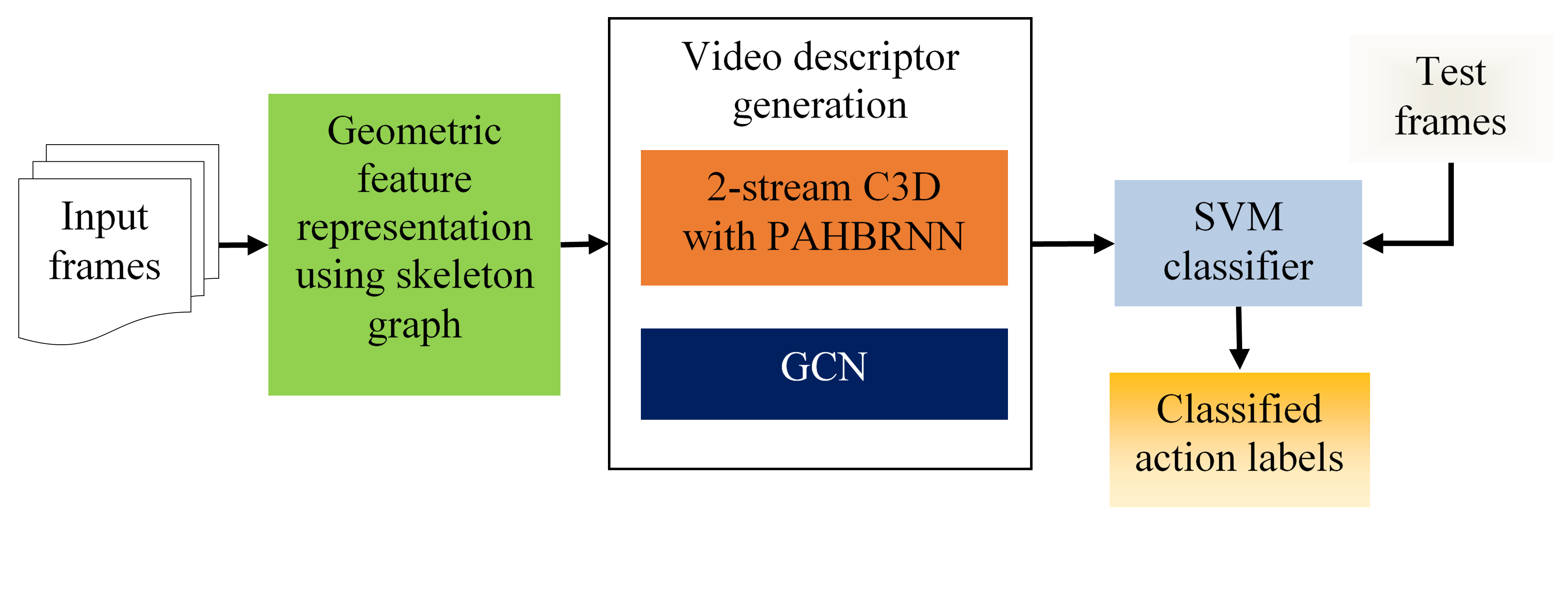

Many researchers are now focusing on Human Action Recognition (HAR) from videos, which relies on various deep-learning features related to body joints and their trajectories. Among the many schemes, the Joints and Trajectory-pooled 3D-Deep Geometric Positional Attention-based Hierarchical Bidirectional Recurrent convolutional Descriptors (JTDGPAHBRD) provide a video descriptor by learning geometric features and trajectories of the body joints. However, the spatial-temporal dynamics of the different geometric features of the skeleton structure have not been explored in depth. To solve this problem, this article adds a Graph Convolutional Network (GCN) to the JTDGPAHBRD to create a video descriptor for HAR. The GCN captures complementary information between consecutive frames, such as higher-level spatial-temporal features, to enhance end-to-end learning. In addition, to improve feature representation ability, a search space with several adaptive graph components is created, and a sampling- and computation-efficient evolution scheme is applied to explore this space. The resulting GCN provides the temporal dynamics of the skeleton pattern, which are fused with the geometric features of the skeleton body joints and trajectory coordinates from the JTDGPAHBRD to create a more effective video descriptor for HAR. Finally, extensive experiments show that the JTDGPAHBRD-GCN model outperforms existing HAR models on the Penn Action Dataset (PAD).
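To make the graph-convolution and fusion steps in the abstract more concrete, the following is a minimal NumPy sketch rather than the authors' implementation. It assumes a standard normalized-adjacency graph convolution, ReLU(ÂXW), applied per frame to skeleton joint coordinates, followed by average pooling and simple concatenation with a second descriptor. The toy five-joint chain, the feature sizes, the random weights, and the function names `normalized_adjacency`, `graph_conv`, and `fuse` are all illustrative assumptions; the adaptive graph components and the evolution-based search are not modelled here.

```python
import numpy as np


def normalized_adjacency(edges, num_joints):
    """Return D^{-1/2} (A + I) D^{-1/2} for an undirected skeleton graph."""
    a = np.eye(num_joints)  # self-loops keep each joint's own features
    for i, j in edges:
        a[i, j] = 1.0
        a[j, i] = 1.0
    deg = a.sum(axis=1)  # always >= 1 because of the self-loops
    d_inv_sqrt = np.diag(deg ** -0.5)
    return d_inv_sqrt @ a @ d_inv_sqrt


def graph_conv(x, a_hat, weight):
    """One spatial graph convolution, ReLU(A_hat X W), applied to every frame.

    x:      (frames, joints, in_features) joint features
    a_hat:  (joints, joints) normalized adjacency
    weight: (in_features, out_features) projection (learned in practice)
    """
    return np.maximum(a_hat @ x @ weight, 0.0)


def fuse(gcn_features, other_descriptor):
    """Average-pool GCN features over frames and joints, then concatenate.

    Concatenation is an assumed fusion rule chosen for illustration only.
    """
    pooled = gcn_features.mean(axis=(0, 1))
    return np.concatenate([pooled, other_descriptor])


# Toy example: a 5-joint chain observed over 4 frames with (x, y) coordinates.
rng = np.random.default_rng(0)
edges = [(0, 1), (1, 2), (2, 3), (3, 4)]        # hypothetical skeleton
a_hat = normalized_adjacency(edges, num_joints=5)
joints = rng.normal(size=(4, 5, 2))             # (frames, joints, coords)
weight = rng.normal(size=(2, 8))                # stand-in for learned weights
gcn_out = graph_conv(joints, a_hat, weight)     # (4, 5, 8)
other = rng.normal(size=16)                     # stand-in for another descriptor
video_descriptor = fuse(gcn_out, other)         # length 8 + 16
print(video_descriptor.shape)
```

In a trained model the weights would be learned end to end and several such layers would typically be stacked with temporal convolutions; the sketch only shows how joint features propagate along skeleton edges before being merged with a second feature vector.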
