Integrating Temporal Fluctuations in Crop Growth with Stacked Bidirectional LSTM and 3D CNN Fusion for Enhanced Crop Yield Prediction

Abstract

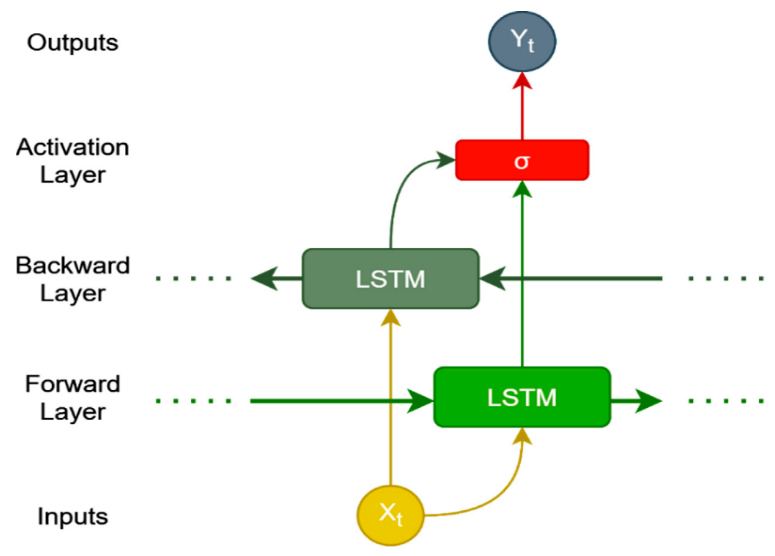

Accurate crop yield prediction is critical for optimizing farming practices and securing a stable food supply. Conventional crop growth models generally ignore the dynamic temporal changes that occur during crop growth, which makes their projections less precise. We propose a neural network model that accounts for temporal fluctuations in the crop growth process by fusing a stacked bidirectional Long Short-Term Memory (LSTM) network with a 3D Convolutional Neural Network (CNN). The 3D CNN extracts spatial and temporal features from the crop development data, while the bidirectional LSTM cells capture sequential dependencies and let the model learn from both earlier and later time steps. Combining the LSTM and 3D CNN layers at the top of the network improves prediction accuracy because the fused representation better captures temporal and spatial patterns. We also introduce a label-related loss function tailored to crop yield forecasting. It is motivated by the importance of temporal fluctuations in crop development and the dynamic nature of crop growth, and it encourages the model to learn and exploit temporal trends, which improves yield estimation. We run comprehensive experiments on real-world crop growth datasets to verify the effectiveness of the proposed approach. The results show that the unified model substantially outperforms both baseline crop growth prediction algorithms and state-of-the-art deep learning methods. The gain in accuracy comes from integrating temporal variation through the bidirectional LSTM and 3D CNN fusion together with the new loss function. This study advances crop yield estimation, which is important for informing agricultural policy and ensuring a stable food supply.
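
The fusion described in the abstract can be illustrated with a minimal PyTorch sketch. This is not the authors' implementation: the class name `BiLSTM3DCNNFusion`, the layer sizes, the input shapes, and the choice of simple concatenation as the fusion step are all assumptions made for illustration. The abstract does not specify the label-related loss function, so it is not sketched here.

```python
import torch
from torch import nn


class BiLSTM3DCNNFusion(nn.Module):
    """Hypothetical late fusion of a stacked BiLSTM branch and a 3D CNN branch."""

    def __init__(self, seq_features=8, channels=4, hidden=32, lstm_layers=2):
        super().__init__()
        # Temporal branch: stacked bidirectional LSTM over per-step features.
        self.lstm = nn.LSTM(
            input_size=seq_features,
            hidden_size=hidden,
            num_layers=lstm_layers,
            batch_first=True,
            bidirectional=True,
        )
        # Spatio-temporal branch: 3D convolutions over (time, height, width).
        self.cnn = nn.Sequential(
            nn.Conv3d(channels, 16, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.Conv3d(16, 32, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.AdaptiveAvgPool3d(1),  # -> (batch, 32, 1, 1, 1)
            nn.Flatten(),             # -> (batch, 32)
        )
        # Fusion head: concatenate both branches and regress one yield value.
        self.head = nn.Sequential(
            nn.Linear(2 * hidden + 32, 64),
            nn.ReLU(),
            nn.Linear(64, 1),
        )

    def forward(self, seq, cube):
        # seq:  (batch, time, seq_features)
        # cube: (batch, channels, time, height, width)
        out, _ = self.lstm(seq)  # (batch, time, 2 * hidden)
        h = self.lstm.hidden_size
        # Forward direction is complete at the last step, backward at the first.
        temporal = torch.cat([out[:, -1, :h], out[:, 0, h:]], dim=1)
        spatial = self.cnn(cube)
        fused = torch.cat([temporal, spatial], dim=1)
        return self.head(fused).squeeze(-1)  # (batch,)


# Usage with random stand-in data (assumed shapes, not a real dataset).
torch.manual_seed(0)
model = BiLSTM3DCNNFusion()
seq = torch.randn(5, 12, 8)            # 5 fields, 12 time steps, 8 features
cube = torch.randn(5, 4, 12, 16, 16)   # 4 bands, 12 time steps, 16x16 pixels
pred = model(seq, cube)
print(pred.shape)  # torch.Size([5])
```

Concatenating the two branch outputs before a small regression head is one common way to fuse them "at the top"; other options, such as attention-weighted fusion, would fit the abstract's description equally well.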
