An Integrated Kernel PCA Neural Network and EGM for Number of Sources Estimation in Wireless Communication

Abstract

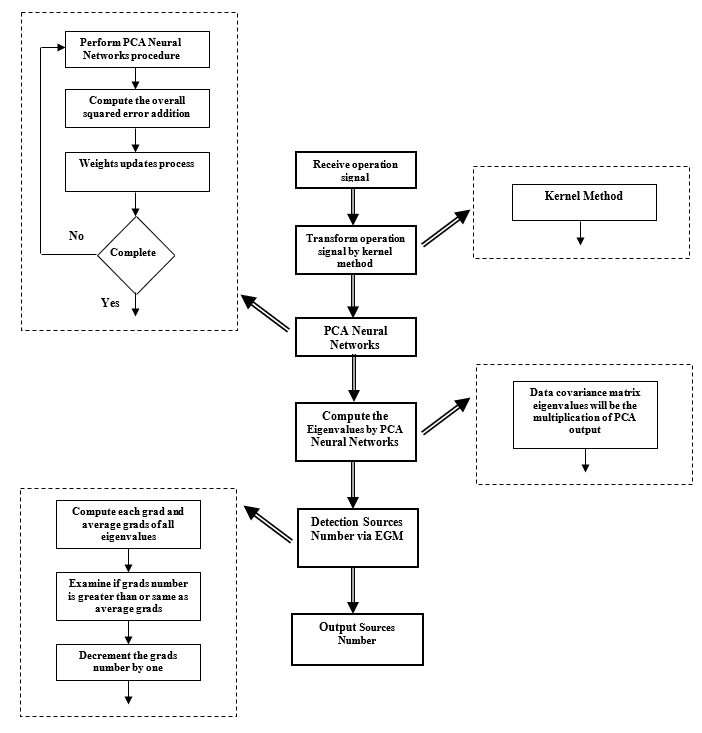

This work addresses the estimation of the number of sources in a communication system using an integrated model that combines a Principal Component Analysis (PCA) neural network and the kernel method with the Eigenvalue Grads Method (EGM). The main advantage of the proposed model is that the covariance matrix is determined by the PCA neural network instead of by the traditional, time-consuming computation process. Simulation results for the proposed model demonstrate its effectiveness, a fast convergence speed for the PCA neural network, and correct estimation of the number of sources.
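The pipeline described above (a PCA neural network supplying eigenvalues, followed by an eigenvalue-gradient rule that turns them into a source count) can be illustrated with a minimal Python sketch. It rests on several assumptions that are not taken from the article: Sanger's Generalized Hebbian Algorithm (GHA) stands in for the PCA neural network; the array data are simulated with a hypothetical real-valued steering vector and hypothetical angles, powers, noise level, learning rate and epoch count; the kernel mapping is omitted entirely; and `egm_estimate` is a simplified gradient rule, not necessarily the exact EGM decision criterion. All function names are hypothetical. The eigenvalue estimates come from the network outputs alone, without forming the sample covariance matrix.

```python
import math
import random


def steering(theta_deg, m):
    """Hypothetical real-valued steering vector for an m-element,
    half-wavelength uniform linear array (real part only, a simplification)."""
    u = math.pi * math.sin(math.radians(theta_deg))
    return [math.cos(u * i) for i in range(m)]


def simulate(n_snapshots, m, angles, powers, noise_std, rng):
    """Snapshots x(t) = A s(t) + n(t) with Gaussian sources and white noise."""
    steer = [steering(th, m) for th in angles]
    data = []
    for _ in range(n_snapshots):
        s = [rng.gauss(0.0, math.sqrt(p)) for p in powers]
        x = [sum(a[i] * sk for a, sk in zip(steer, s)) + rng.gauss(0.0, noise_std)
             for i in range(m)]
        data.append(x)
    return data


def gha_train(data, n_components, lr, epochs, rng):
    """Sanger's GHA: row i of w converges toward the i-th principal
    eigenvector; the covariance matrix is never formed."""
    m = len(data[0])
    w = [[rng.gauss(0.0, 1.0 / math.sqrt(m)) for _ in range(m)]
         for _ in range(n_components)]
    for _ in range(epochs):
        for x in data:
            y = [sum(c * xd for c, xd in zip(wi, x)) for wi in w]
            r = list(x)  # residual x - sum_{j<=i} y_j w_j, built row by row
            for i, wi in enumerate(w):
                for d in range(m):
                    r[d] -= y[i] * wi[d]          # uses the pre-update weight
                    wi[d] += lr * y[i] * r[d]     # Hebbian update with deflation
    return w


def rayleigh_eigenvalues(data, w):
    """Eigenvalue estimate per neuron: mean(y_i^2) / ||w_i||^2, sorted descending."""
    eigs = []
    for wi in w:
        norm2 = sum(c * c for c in wi)
        power = sum(sum(c * xd for c, xd in zip(wi, x)) ** 2 for x in data) / len(data)
        eigs.append(power / norm2)
    return sorted(eigs, reverse=True)


def egm_estimate(eigs):
    """Simplified eigenvalue-gradient rule (an assumption): the estimate is the
    last 1-based index whose drop lambda_i - lambda_{i+1} exceeds the mean drop."""
    grads = [eigs[i] - eigs[i + 1] for i in range(len(eigs) - 1)]
    mean_grad = sum(grads) / len(grads)
    k = 0
    for i, g in enumerate(grads):
        if g > mean_grad:
            k = i + 1
    return k


if __name__ == "__main__":
    rng = random.Random(0)
    data = simulate(2000, 6, angles=[10.0, 40.0], powers=[4.0, 2.0],
                    noise_std=0.5, rng=rng)
    w = gha_train(data, n_components=6, lr=0.002, epochs=5, rng=rng)
    eigs = rayleigh_eigenvalues(data, w)
    print("eigenvalue estimates:", [round(e, 3) for e in eigs])
    print("estimated number of sources:", egm_estimate(eigs))
```

With two sources above a white-noise floor, the two largest eigenvalue drops sit at the first two gaps while the noise eigenvalues stay nearly flat, so the rule is expected to report two sources. The small learning rate is a deliberate choice: GHA updates can diverge when the step size is large relative to the input power.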
