A Novel Chaos Quasi-Oppositional based Flamingo Search Algorithm with Simulated Annealing for Feature Selection

Abstract

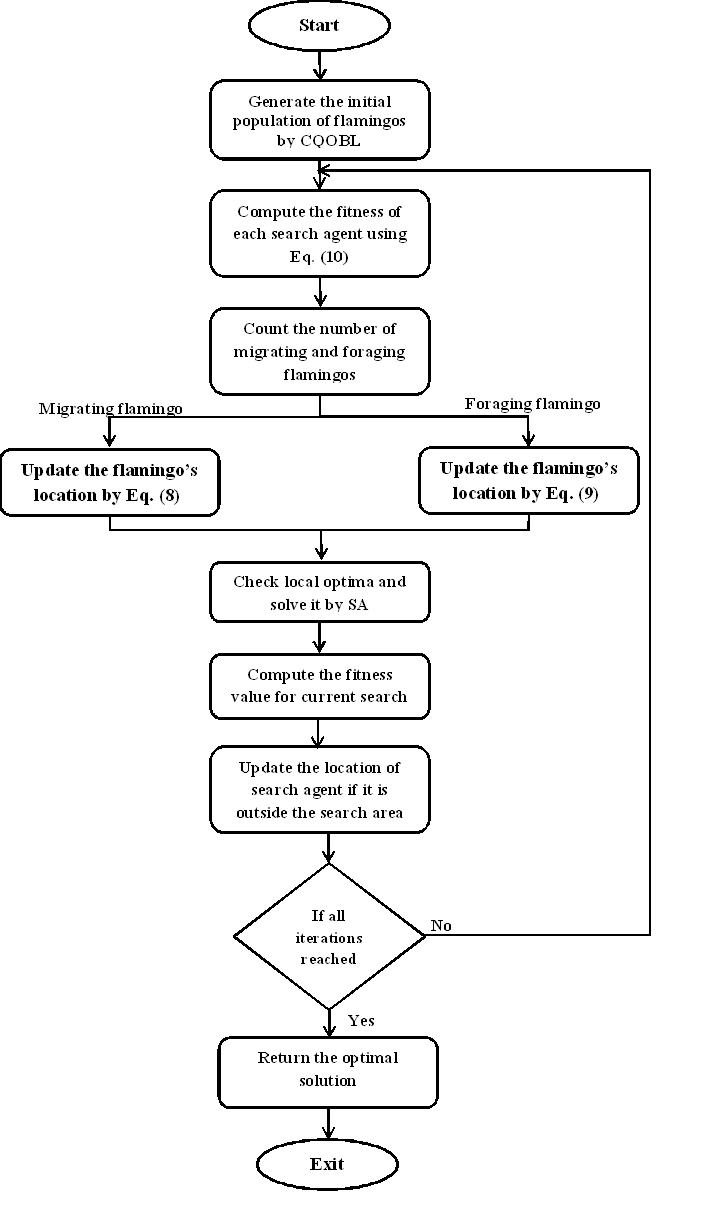

Feature selection is one of the most important tasks in machine learning. Reducing the feature set helps to increase classifier accuracy, but because datasets contain large amounts of information, selecting the necessary features is a demanding process. To address this problem, a novel Chaos Quasi-Oppositional based Flamingo Search Algorithm with Simulated Annealing (CQOFSA-SA) is proposed for feature selection. It selects the optimal feature set and thereby reduces the dimension of the dataset. The Flamingo Search Algorithm (FSA) chooses the optimal feature subset from the dataset. In each iteration, the best solution found by FSA is refined by Simulated Annealing (SA). Chaos Quasi-Oppositional based learning (CQOBL), included in the initialization of FSA, improves the convergence rate and increases the search capability of FSA in choosing the optimal feature set. The experimental results show that the proposed CQOFSA-SA outperforms other feature selection approaches in terms of accuracy, size of the reduced feature set, convergence speed, and fitness value.
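The two components wrapped around FSA can be illustrated with a minimal Python sketch. It is not the authors' implementation: the abstract does not specify the chaotic map, the fitness function, or the SA schedule, so the logistic map, the `toy_fitness` function with its `RELEVANT` set, the 0.5 binarization threshold, and all parameter values below are assumptions chosen for illustration. The FSA position update itself is omitted.

```python
import math
import random

RELEVANT = {0, 2, 5}  # hypothetical "useful" feature indices for the toy fitness


def to_mask(position):
    """Binarize a continuous position into a feature mask (assumed 0.5 threshold)."""
    return [1 if v > 0.5 else 0 for v in position]


def toy_fitness(mask, alpha=0.9):
    """Stand-in for a wrapper fitness: a proxy error term plus a subset-size term.
    Lower is better. A real wrapper would use classifier error instead."""
    missed = sum(1 for i in RELEVANT if mask[i] == 0) / len(RELEVANT)
    size = sum(mask) / len(mask)
    return alpha * missed + (1 - alpha) * size


def logistic_map(c, r=4.0):
    """One step of the logistic chaotic map (assumed choice of map)."""
    return r * c * (1.0 - c)


def quasi_opposite(x, lo, hi, c):
    """Point between the interval centre and the opposite point lo + hi - x.
    The chaotic value c in [0, 1] replaces the usual uniform random draw."""
    centre = (lo + hi) / 2.0
    opposite = lo + hi - x
    return centre + c * (opposite - centre)


def chaos_qobl_init(n_agents, n_features, fitness, lo=0.0, hi=1.0, seed=0):
    """Draw random agents, add their chaotic quasi-opposites, keep the fittest."""
    rng = random.Random(seed)
    c = 0.7  # chaotic state; avoid 0, 0.25, 0.5, 0.75 and 1, which degenerate
    pool = []
    for _ in range(n_agents):
        x = [rng.uniform(lo, hi) for _ in range(n_features)]
        q = []
        for xi in x:
            c = logistic_map(c)
            q.append(quasi_opposite(xi, lo, hi, c))
        pool.extend([x, q])
    pool.sort(key=fitness)
    return pool[:n_agents]


def sa_refine(mask, fitness, t0=1.0, cooling=0.9, steps=50, seed=0):
    """Refine a binary mask by single-bit flips with Metropolis acceptance."""
    rng = random.Random(seed)
    cur, cur_f = mask[:], fitness(mask)
    best, best_f = cur[:], cur_f
    t = t0
    for _ in range(steps):
        cand = cur[:]
        i = rng.randrange(len(cand))
        cand[i] = 1 - cand[i]  # flip one feature in or out of the subset
        f = fitness(cand)
        # Always accept improvements; accept worse moves with probability exp(-delta / t).
        if f <= cur_f or rng.random() < math.exp((cur_f - f) / t):
            cur, cur_f = cand, f
            if cur_f < best_f:
                best, best_f = cur[:], cur_f
        t *= cooling  # geometric cooling (assumed schedule)
    return best, best_f


# Usage: initialize a population, then refine its best member with SA.
population = chaos_qobl_init(6, 8, lambda p: toy_fitness(to_mask(p)))
best_mask, best_fit = sa_refine(to_mask(population[0]), toy_fitness)
```

In a full hybrid, `sa_refine` would be called on the best FSA solution once per iteration, and the refined mask would replace it only if its fitness is no worse.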
