Exploration of Deep Learning Models for Video-Based Multiple Human Activity Recognition


Abstract

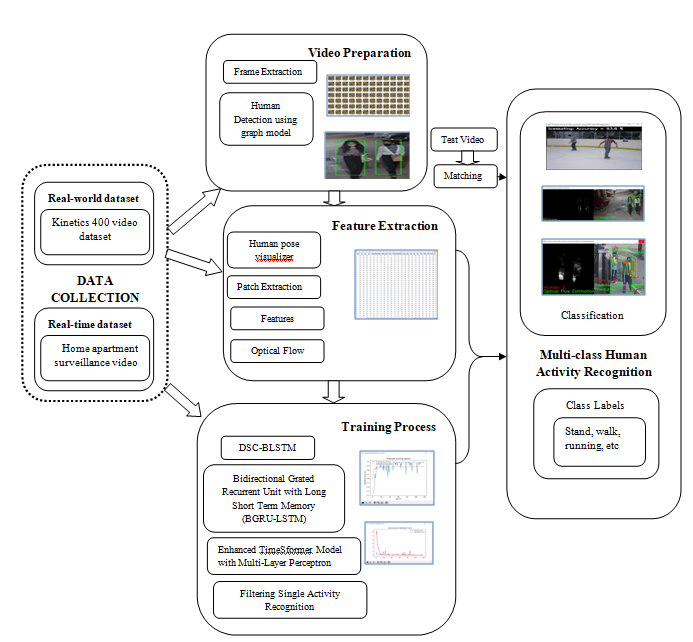

Human Activity Recognition (HAR) with deep learning is a challenging and highly demanding classification task. The main difficulties are the complexity of the activities to be detected and the number of subjects involved. Data mining approaches have improved decision-making performance in this area. This work presents one such model, which recognizes multiple subjects carrying out multiple activities. The work uses real-time datasets and develops a fast algorithm that mitigates the problems of neural network classifiers. The algorithm performs optimal feature extraction, builds a multi-modal classification technique, and predicts activities with better accuracy than traditional methods. HAR prediction is described in four phases:

(i) Depthwise Separable Convolution with BiLSTM (DSC-BLSTM);
(ii) Enhanced Bidirectional Gated Recurrent Unit with Long Short-Term Memory (BGRU-LSTM);
(iii) Enhanced TimeSformer Model with Multi-Layer Perceptron neural network classification (ETMLP); and
(iv) Filtering Single Activity Recognition.

Compared with the earlier DSC-BLSTM and BGRU-LSTM classifiers, the ETMLP classifier achieved an experimental accuracy of 98.90%, outperforming the other models.
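Phase (i) builds on depthwise separable convolution. The abstract does not give the layer configuration, so the block below is only a generic sketch, not the authors' DSC-BLSTM. The convolution is split into two stages:

* a depthwise stage, which applies one filter per input channel;
* a pointwise stage, which uses a 1 x 1 convolution to mix the channels.

The sketch also compares the weight count of a 2-D k x k separable layer with that of a standard convolution. To keep it short, the operation itself is shown in 1-D, for example over a per-frame feature sequence. The function names, the 1-D setting, stride 1, "valid" padding, and the omission of biases are illustrative assumptions.

```python
# Illustrative sketch only: a generic depthwise separable convolution,
# not the DSC-BLSTM configuration from this work (which the abstract omits).
# Assumptions: 1-D operation, stride 1, "valid" padding, no biases.


def standard_conv_params(k, c_in, c_out):
    """Weights in a standard 2-D k x k convolution (biases omitted)."""
    return k * k * c_in * c_out


def separable_conv_params(k, c_in, c_out):
    """Weights in a 2-D depthwise k x k stage plus a 1 x 1 pointwise stage."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 convolution mixing channels
    return depthwise + pointwise


def depthwise_separable_conv1d(x, depthwise, pointwise):
    """
    x:         C_in channels, each a list of T samples (e.g. a feature over time)
    depthwise: C_in kernels of length K, one per input channel
    pointwise: C_out x C_in weight matrix (a 1 x 1 convolution)
    Returns C_out output channels, each of length T - K + 1.
    """
    k = len(depthwise[0])
    t_out = len(x[0]) - k + 1
    # Depthwise stage: each channel is filtered only by its own kernel
    # (cross-correlation, as deep learning frameworks implement "convolution").
    filtered = [
        [sum(ch[t + i] * ker[i] for i in range(k)) for t in range(t_out)]
        for ch, ker in zip(x, depthwise)
    ]
    # Pointwise stage: at every time step, mix the filtered channels linearly.
    return [
        [sum(w * filtered[c][t] for c, w in enumerate(row)) for t in range(t_out)]
        for row in pointwise
    ]


if __name__ == "__main__":
    x = [[1, 2, 3, 4], [0, 1, 0, 1]]   # 2 input channels, 4 time steps
    depthwise = [[1, 1], [1, -1]]      # kernel size K = 2
    pointwise = [[1, 1], [2, 0]]       # 2 output channels
    print(depthwise_separable_conv1d(x, depthwise, pointwise))
    # -> [[2, 6, 6], [6, 10, 14]]
    print(standard_conv_params(3, 32, 64), separable_conv_params(3, 32, 64))
    # -> 18432 2336
```

Consider a 3 x 3 kernel mapping 32 channels to 64. A standard layer needs 18,432 weights. The separable version needs 2,336 (288 depthwise + 2,048 pointwise), roughly 7.9 times fewer. This saving is a common reason to use separable layers as a lightweight feature extractor ahead of a recurrent stage such as a BiLSTM.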

