Adaptive Resource Allocation in Cloud Data Centers using Actor-Critical Deep Reinforcement Learning for Optimized Load Balancing

Abstract

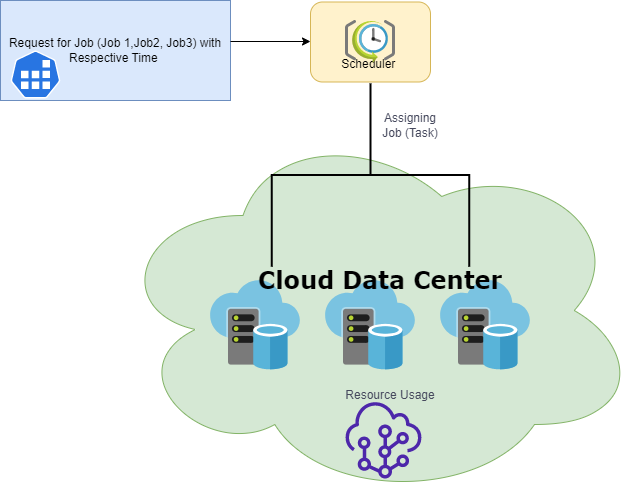

This paper proposes a deep reinforcement learning-based actor-critic method for efficient resource allocation in cloud computing. An actor network generates the allocation strategy and a critic network evaluates the quality of each allocation; both networks are trained with a deep reinforcement learning algorithm to optimize the strategy. In a simulation-based experimental study, the method outperforms several existing allocation methods in terms of resource utilization, energy efficiency, and overall cost. Previous work has developed algorithms for managing workloads or virtual machines to reduce energy consumption; however, these solutions often fail to account for the highly dynamic nature of server states and have not been implemented at a sufficiently large scale. To guarantee the QoS of workloads while lowering the computational energy consumption of physical servers, this study proposes the Actor Critic based Compute-Intensive Workload Allocation Scheme (AC-CIWAS). AC-CIWAS captures the dynamic features of server states continuously and considers the influence of different workloads on energy consumption to achieve reasonable task allocation. To determine the most energy-efficient workload allocation, AC-CIWAS uses a Deep Reinforcement Learning (DRL)-based Actor Critic (AC) algorithm to estimate the expected cumulative return over time. In simulation, the proposed AC-CIWAS reduces the workload of scheduled jobs by around 20% compared with existing baseline allocation methods while maintaining QoS assurance. The paper also discusses how the proposed technique could be applied in cloud computing and offers suggestions for future study.
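The actor-critic loop summarized in the abstract can be illustrated with a small sketch. This is not the authors' AC-CIWAS implementation: it replaces the deep networks with linear function approximation, and the environment (`step`), the reward (negative sum of squared utilizations plus an overload penalty standing in for QoS), the drain factor `decay`, and all class and parameter names are assumptions chosen only to keep the example self-contained.

```python
import math
import random


def softmax(prefs):
    """Numerically stable softmax over a list of preferences."""
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


class ActorCriticAllocator:
    """One-step actor-critic with linear approximation (toy sketch, hypothetical names)."""

    def __init__(self, n_servers, actor_lr=0.05, critic_lr=0.1, gamma=0.9, seed=0):
        self.n = n_servers
        self.actor_lr, self.critic_lr, self.gamma = actor_lr, critic_lr, gamma
        self.rng = random.Random(seed)
        self.theta = [0.0] * n_servers  # actor: preference_i = theta[i] * utilization[i]
        self.w = [0.0] * n_servers      # critic: V(s) = bias + sum_i w[i] * utilization[i]
        self.bias = 0.0

    def policy(self, state):
        return softmax([t * u for t, u in zip(self.theta, state)])

    def value(self, state):
        return self.bias + sum(w * u for w, u in zip(self.w, state))

    def act(self, state):
        # Sample a target server from the actor's softmax distribution.
        return self.rng.choices(range(self.n), weights=self.policy(state))[0]

    def update(self, state, action, reward, next_state):
        # The critic's TD error scores the allocation the actor just made.
        td_error = reward + self.gamma * self.value(next_state) - self.value(state)
        probs = self.policy(state)
        for i, u in enumerate(state):
            self.w[i] += self.critic_lr * td_error * u
            # Gradient of log softmax w.r.t. theta[i] is (1[i == action] - pi_i) * u_i.
            indicator = 1.0 if i == action else 0.0
            self.theta[i] += self.actor_lr * td_error * (indicator - probs[i]) * u
        self.bias += self.critic_lr * td_error
        return td_error


def step(state, action, demand, decay=0.9):
    """Assumed toy environment: running load drains by `decay`, then `demand` is placed."""
    nxt = [u * decay for u in state]
    nxt[action] += demand
    overload = max(0.0, nxt[action] - 1.0)  # stand-in for a QoS violation
    nxt[action] = min(1.0, nxt[action])
    reward = -sum(u * u for u in nxt) - 5.0 * overload
    return nxt, reward


def train(n_servers=4, steps=2000, seed=0):
    """Run the allocation loop on synthetic workloads and return the agent and final state."""
    agent = ActorCriticAllocator(n_servers, seed=seed)
    workload_rng = random.Random(seed + 1)
    state = [0.0] * n_servers
    for _ in range(steps):
        demand = workload_rng.uniform(0.05, 0.2)
        action = agent.act(state)
        next_state, reward = step(state, action, demand)
        agent.update(state, action, reward, next_state)
        state = next_state
    return agent, state
```

Calling `agent, state = train()` runs the loop on synthetic workloads, after which `agent.policy(state)` returns the actor's allocation probabilities for the current server utilizations. A full DRL variant would swap the linear actor and critic for neural networks and use a measured power model in place of the quadratic reward assumed here.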
