Prevention in Healthcare: An Explainable AI Approach

Abstract

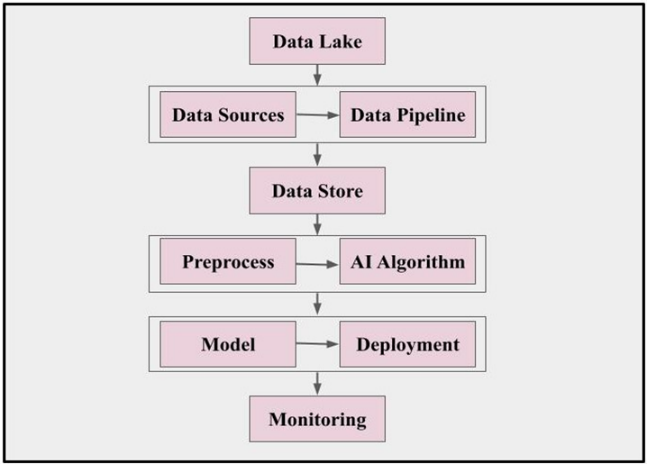

Intrusion prevention is critical to the security of healthcare systems, which hold sensitive patient data. Explainable AI can make intrusion prevention more effective: machine learning models detect and block security breaches, and the system explains each decision in clear, interpretable terms. These explanations let healthcare professionals understand the reasoning behind an intrusion detection alert and take appropriate action. The approach helps protect the confidentiality, integrity, and availability of patient data and supports regulatory compliance. This paper explores the application of explainable AI to intrusion prevention in healthcare and its potential benefits for the security of healthcare systems.
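
To make the idea of an interpretable alert concrete, the following minimal sketch scores an access event with a small linear model and reports how much each feature contributed to the decision. It is an illustration under stated assumptions, not the method of the paper: the feature names, weights, bias, threshold, and the `explain_event` helper are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical feature weights, chosen for illustration only.
WEIGHTS = {
    "failed_logins": 0.8,      # failed login attempts in the session
    "records_accessed": 0.02,  # patient records opened in the session
    "off_hours": 1.5,          # 1 if the access happened outside working hours
}
BIAS = -4.0
THRESHOLD = 0.0  # an event scoring above this value is blocked


@dataclass
class Alert:
    blocked: bool
    score: float
    reasons: list  # (feature, contribution) pairs, largest contribution first


def explain_event(event: dict) -> Alert:
    """Score an access event and report each feature's contribution."""
    contributions = {name: WEIGHTS[name] * event.get(name, 0) for name in WEIGHTS}
    score = BIAS + sum(contributions.values())
    reasons = sorted(contributions.items(), key=lambda kv: kv[1], reverse=True)
    return Alert(blocked=score > THRESHOLD, score=score, reasons=reasons)


if __name__ == "__main__":
    alert = explain_event({"failed_logins": 5, "records_accessed": 40, "off_hours": 1})
    print("blocked:", alert.blocked)
    for feature, contribution in alert.reasons:
        print(f"  {feature}: {contribution:+.2f}")
```

Because the score is a plain sum, the ranked contributions are the explanation: a reviewer can see which observed behaviour pushed the event over the threshold. A production system would learn the weights from data and would likely use a richer model paired with a dedicated explanation technique.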
