PEMO: A New Validated Dataset for Punjabi Speech Emotion Detection


Abstract

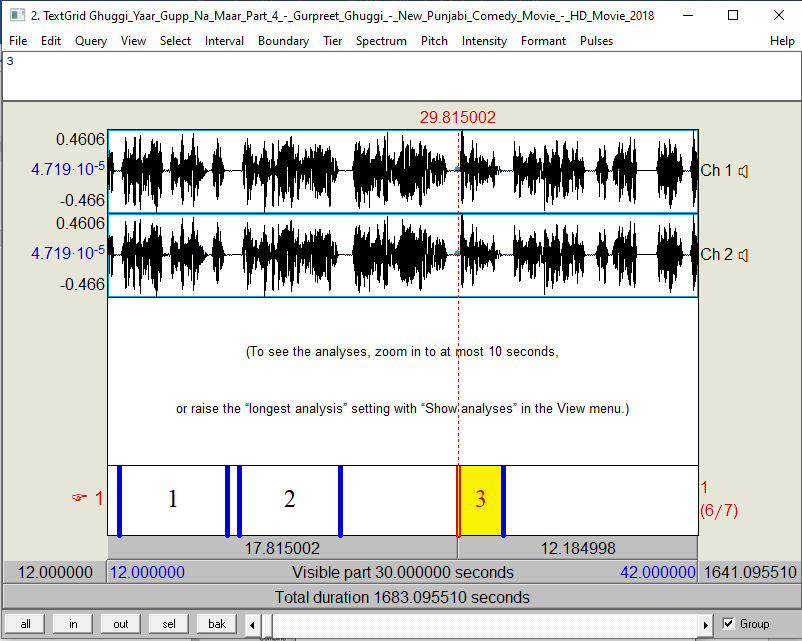

This work presents the Punjabi Emotional Speech Database (PEMO), a new validated dataset for Punjabi developed to assess how well both computers and humans recognize emotions in speech. PEMO includes speech samples from about 60 speakers aged between 20 and 45 years, covering four fundamental emotions: anger, sadness, happiness, and neutral. To build the dataset, Punjabi films were retrieved from multimedia websites such as YouTube, and the recordings were segmented into utterances using the PRAAT software. The database contains 22,000 natural utterances, amounting to 12 hours and 35 minutes of speech taken from online Punjabi movies and web series. Three annotators, all with a thorough knowledge of the Punjabi language, labelled the emotional content of each utterance; the label assigned by all three annotators became the final label for that utterance. The dataset is intended for studying how emotions are expressed in Punjabi speech.
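The unanimous-agreement labelling rule described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' tooling: the abstract does not say what happens to utterances on which the annotators disagree, so this sketch simply leaves them without a final label. The utterance IDs, the `final_label` helper, and the label strings are hypothetical.

```python
from typing import Dict, List, Optional, Sequence

# The four emotion classes named in the abstract (label strings are hypothetical).
EMOTIONS = {"anger", "sad", "happy", "neutral"}


def final_label(annotations: Sequence[str]) -> Optional[str]:
    """Return the label every annotator agreed on, or None otherwise.

    Assumption: an utterance without unanimous agreement receives no final label.
    """
    if not annotations:
        return None
    first = annotations[0]
    return first if all(a == first for a in annotations) else None


# Hypothetical annotations: utterance id -> labels from the three annotators.
annotations: Dict[str, List[str]] = {
    "utt_0001": ["anger", "anger", "anger"],
    "utt_0002": ["sad", "neutral", "sad"],  # disagreement, so no final label
    "utt_0003": ["happy", "happy", "happy"],
}

# Keep only utterances whose agreed label is one of the four emotion classes.
labelled: Dict[str, str] = {}
for utt_id, labels in annotations.items():
    label = final_label(labels)
    if label is not None and label in EMOTIONS:
        labelled[utt_id] = label

print(labelled)  # {'utt_0001': 'anger', 'utt_0003': 'happy'}
```

Requiring unanimity rather than a majority vote trades dataset size for label reliability: an utterance with a two-to-one split, such as `utt_0002`, is excluded under this reading of the rule.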

