Sentence Semantic Similarity based Complex Network approach for Word Sense Disambiguation

Abstract

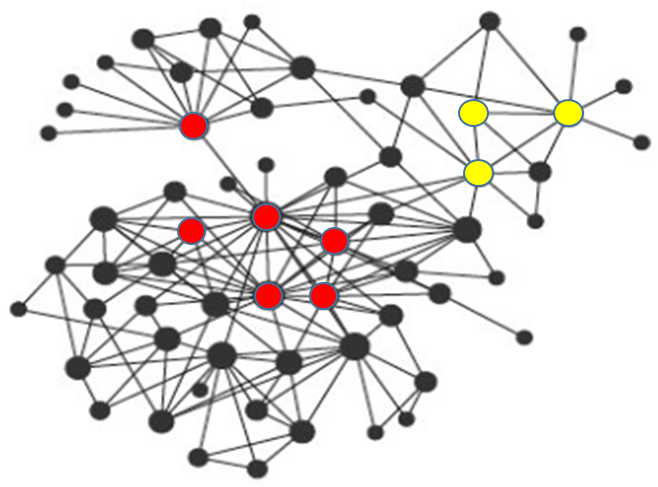

Word Sense Disambiguation (WSD) is a branch of Natural Language Processing (NLP) that deals with words that have multiple senses. Such multi-sense words are called polysemous words, and they give rise to lexical ambiguity. Existing sense disambiguation modules work effectively on single sentences when context information is available. Word embeddings play a vital role in the disambiguation process, and a context-dependent word embedding model is used for disambiguation. The main goal of this research paper is to disambiguate polysemous words by exploiting the available context information. The main challenge identified is an ambiguous word that lacks context information. The proposed complex network approach disambiguates ambiguous sentences by considering semantic similarity; to this end, a network based on sentence semantic similarity is constructed. The proposed methodology is trained on the standard lexical resources SemCor, Adaptive-Lex, and OMSTI. The findings indicate that the methodology works well for disambiguating large documents in which the sense of an ambiguous sentence can be inferred from its adjacent sentences.
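The abstract does not specify how the sentence network is built or queried, so the following Python sketch only illustrates the general idea under assumptions of its own. Nodes are sentence indices; edges join consecutive sentences (fixed weight `adjacent_weight`) and any pair whose cosine similarity reaches `threshold`. Bag-of-words vectors stand in for the context-dependent word embedding model the paper uses, the sense keys and glosses are hypothetical, and scoring each sense by gloss similarity is a Lesk-style choice made for illustration, not necessarily the authors' method.

```python
import math
import re
from collections import Counter

# Assumption: a tiny stop-word list so that function words do not create
# spurious similarity between sentences.
STOPWORDS = {"a", "an", "the", "of", "and", "to", "by", "he", "its", "on",
             "for", "that", "such", "as", "after", "is", "was"}


def bow(text):
    """Bag-of-words count vector (a simple stand-in for word embeddings)."""
    tokens = re.findall(r"[a-z]+", text.lower())
    return Counter(t for t in tokens if t not in STOPWORDS)


def cosine(a, b):
    """Cosine similarity of two sparse vectors stored as dicts."""
    dot = sum(v * b[w] for w, v in a.items() if w in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def build_network(sentences, threshold=0.2, adjacent_weight=1.0):
    """Sentence network: nodes are sentence indices; weighted edges join
    consecutive sentences and any pair whose similarity reaches `threshold`.
    Both parameters are hypothetical choices for this sketch."""
    vecs = [bow(s) for s in sentences]
    graph = {i: {} for i in range(len(sentences))}
    for i in range(len(sentences)):
        for j in range(i + 1, len(sentences)):
            sim = cosine(vecs[i], vecs[j])
            weight = max(adjacent_weight if j == i + 1 else 0.0,
                         sim if sim >= threshold else 0.0)
            if weight > 0:
                graph[i][j] = graph[j][i] = weight
    return graph


def disambiguate(i, sentences, graph, senses):
    """Pick the sense whose gloss best matches sentence i plus its network
    neighbours, each neighbour's words scaled by the connecting edge weight."""
    context = Counter(bow(sentences[i]))
    for j, weight in graph[i].items():
        for word, count in bow(sentences[j]).items():
            context[word] += weight * count
    scores = {sense: cosine(context, bow(gloss)) for sense, gloss in senses.items()}
    return max(scores, key=scores.get), scores


sentences = [
    "The river flooded its banks after heavy rain.",
    "Fishermen waited on the muddy shore for the water to fall.",
    "He sat by the bank.",  # ambiguous: no disambiguating context of its own
]
# Hypothetical sense inventory with short glosses (keys are illustrative only).
senses = {
    "bank/finance": "a financial institution that accepts deposits and lends money",
    "bank/river": "sloping land beside a body of water such as a river",
}
graph = build_network(sentences)
best, scores = disambiguate(2, sentences, graph, senses)
print(best)  # bank/river
```

Scored on its own, "He sat by the bank." shares no content words with either gloss, so both senses score 0. The river sense wins only because the adjacent sentence contributes "water" through the network, which mirrors the abstract's observation that the methodology works best when the sense of an ambiguous sentence can be inferred from its neighbours.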
