A Hybrid Optimization Approach for Neural Machine Translation Using LSTM+RNN with MFO for an Under-Resourced Language (Telugu)

Abstract

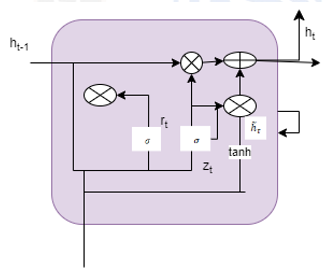

NMT (Neural Machine Translation) is an innovative approach to machine translation that, in contrast to SMT (Statistical Machine Translation) and rule-based techniques, has yielded notable improvements, because NMT overcomes many of the shortcomings inherent in these traditional approaches. NMT has developed rapidly in recent years, but its performance remains suboptimal for low-resource language pairs involving languages such as Telugu, Tamil, and Hindi. In this work, a method for translating Telugu to English is proposed: an optimized approach that improves both accuracy and execution time. A hybrid approach combining Long Short-Term Memory (LSTM) and a traditional Recurrent Neural Network (RNN) is used to train and test on the dataset. For long-range dependencies, an LSTM produces more accurate results than a standard RNN, and the hybrid technique further enhances the performance of the LSTM. The LSTM is used in the encoding phase of NMT and the RNN in the decoding phase. Moth Flame Optimization (MFO) is used in the proposed system to provide the encoder and decoder models with the optimal points for training.
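The abstract does not state which quantities MFO tunes; it says only that MFO supplies the encoder and decoder with the optimal points for training. As a hedged illustration, the sketch below implements a generic MFO minimiser in the standard formulation by Mirjalili (2015): flames store the best positions found so far, each moth flies a logarithmic spiral around its assigned flame, and the number of flames shrinks over the iterations. The function and parameter names (`moth_flame_optimize`, `n_moths`, `b`, `seed`) and the toy `sphere` objective are assumptions for illustration only. In a setup like the paper's, the objective would presumably be something like a validation loss of the LSTM encoder and RNN decoder evaluated at the candidate values, which is not shown here.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple


def moth_flame_optimize(
    objective: Callable[[Sequence[float]], float],
    dim: int,
    lower: float,
    upper: float,
    n_moths: int = 30,
    max_iter: int = 200,
    b: float = 1.0,
    seed: int = 0,
) -> Tuple[List[float], float]:
    """Minimise `objective` over the box [lower, upper]^dim (generic MFO sketch)."""
    rng = random.Random(seed)
    moths = [[rng.uniform(lower, upper) for _ in range(dim)] for _ in range(n_moths)]
    flames: List[List[float]] = []
    flame_fit: List[float] = []

    for it in range(1, max_iter + 1):
        # Clip moths back into the search box before evaluating them.
        moths = [[min(max(x, lower), upper) for x in m] for m in moths]
        moth_fit = [objective(m) for m in moths]

        # Flames = best n_moths positions seen so far (old flames merged with current moths).
        pool = sorted(zip(flame_fit + moth_fit, flames + moths), key=lambda p: p[0])
        pool = pool[:n_moths]
        flame_fit = [f for f, _ in pool]
        flames = [list(pos) for _, pos in pool]

        # Number of flames shrinks linearly from n_moths to 1, moving the swarm
        # from exploration (many flames) to exploitation (only the best flame).
        flame_no = round(n_moths - it * (n_moths - 1) / max_iter)
        # Convergence constant a decreases linearly from -1 to -2.
        a = -1.0 - it / max_iter

        for i, moth in enumerate(moths):
            # Moth i follows flame i; surplus moths all follow the last remaining flame.
            flame = flames[min(i, flame_no - 1)]
            for j in range(dim):
                dist = abs(flame[j] - moth[j])
                t = (a - 1.0) * rng.random() + 1.0  # random t in (a, 1]
                # Logarithmic spiral flight around the assigned flame.
                moth[j] = dist * math.exp(b * t) * math.cos(2.0 * math.pi * t) + flame[j]

    return flames[0], flame_fit[0]


def sphere(x: Sequence[float]) -> float:
    """Toy stand-in objective (minimum 0 at the origin), NOT the paper's loss."""
    return sum(v * v for v in x)


if __name__ == "__main__":
    best, best_fit = moth_flame_optimize(sphere, dim=5, lower=-5.0, upper=5.0)
    print(best, best_fit)
```

A continuous, box-constrained search like this suits real-valued quantities such as learning rates or initial weights; discrete choices (for example hidden-layer sizes) would need an extra rounding step, which this sketch does not include.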
