Integrated Approach for Emotion Detection via Speech and Text Analysis

Abstract

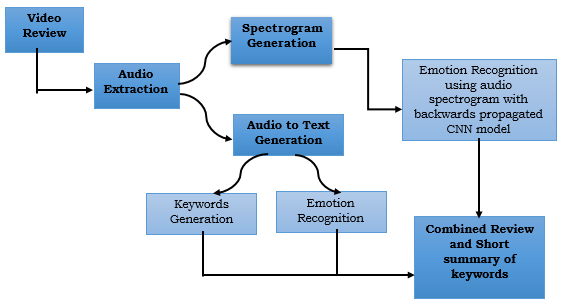

This paper presents a deep-learning framework for turning video product reviews into ratings. Customers often have difficulty finding accurate reviews of the products they are interested in. The proposed framework addresses this problem with a review mechanism that derives relevant ratings from the video reviews supplied in a product description. The system converts each video review into a single rating, so that viewers can grasp the gist of a review at a glance instead of watching the whole video. To do this, the model applies deep neural networks to both text and audio. The audio model is trained on the well-known RAVDESS dataset, which covers a wide range of emotional expressions. The system combines two methods to produce a rating: text-based natural language processing and analysis of audio frequency spectrograms. Using both techniques, it aims to give consumers accurate and trustworthy ratings without slowing down the review process. The authors report that this aim is achieved with high accuracy, so that users can make informed purchasing decisions based on the resulting ratings. With this review system, customers can quickly find the key details about a product they are interested in, which should increase their satisfaction and loyalty.
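The abstract does not say how the audio and text outputs are combined into a rating. As a minimal sketch only, one common option is late fusion: average the per-emotion probabilities from the two models, then map the result to a 1 to 5 scale. Everything named below is an assumption made for illustration and not the authors' method: the emotion subset, the `VALENCE` scores, the `audio_weight` parameter, and the functions `fuse` and `to_rating`.

```python
from typing import Dict

# Hypothetical valence scores in [0, 1] for a subset of the RAVDESS emotion
# labels. These values are illustrative assumptions, not taken from the paper.
VALENCE: Dict[str, float] = {
    "happy": 1.0,
    "calm": 0.75,
    "neutral": 0.5,
    "sad": 0.25,
    "angry": 0.0,
}


def fuse(
    audio_probs: Dict[str, float],
    text_probs: Dict[str, float],
    audio_weight: float = 0.5,
) -> Dict[str, float]:
    """Late fusion: weighted average of two per-emotion probability maps."""
    if not 0.0 <= audio_weight <= 1.0:
        raise ValueError("audio_weight must lie in [0, 1]")
    fused = {
        label: audio_weight * audio_probs.get(label, 0.0)
        + (1.0 - audio_weight) * text_probs.get(label, 0.0)
        for label in VALENCE
    }
    total = sum(fused.values())
    if total <= 0.0:
        raise ValueError("no probability mass on any known emotion label")
    # Renormalise so the fused scores sum to 1.
    return {label: p / total for label, p in fused.items()}


def to_rating(probs: Dict[str, float]) -> float:
    """Map fused probabilities to a 1-5 rating via the expected valence."""
    expected_valence = sum(VALENCE[label] * p for label, p in probs.items())
    return round(1.0 + 4.0 * expected_valence, 1)


if __name__ == "__main__":
    # Hypothetical model outputs for one video review.
    audio = {"happy": 0.8, "neutral": 0.2}
    text = {"happy": 0.6, "calm": 0.4}
    print(to_rating(fuse(audio, text)))
```

In this sketch `audio_weight` controls how much the spectrogram-based model counts relative to the text model; a real system would tune it, or replace the fixed valence table with a learned mapping.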
