Hidden Markov Model Deep Learning Architecture for Virtual Reality Assessment to Compute Human–Machine Interaction-Based Optimization Model

Abstract

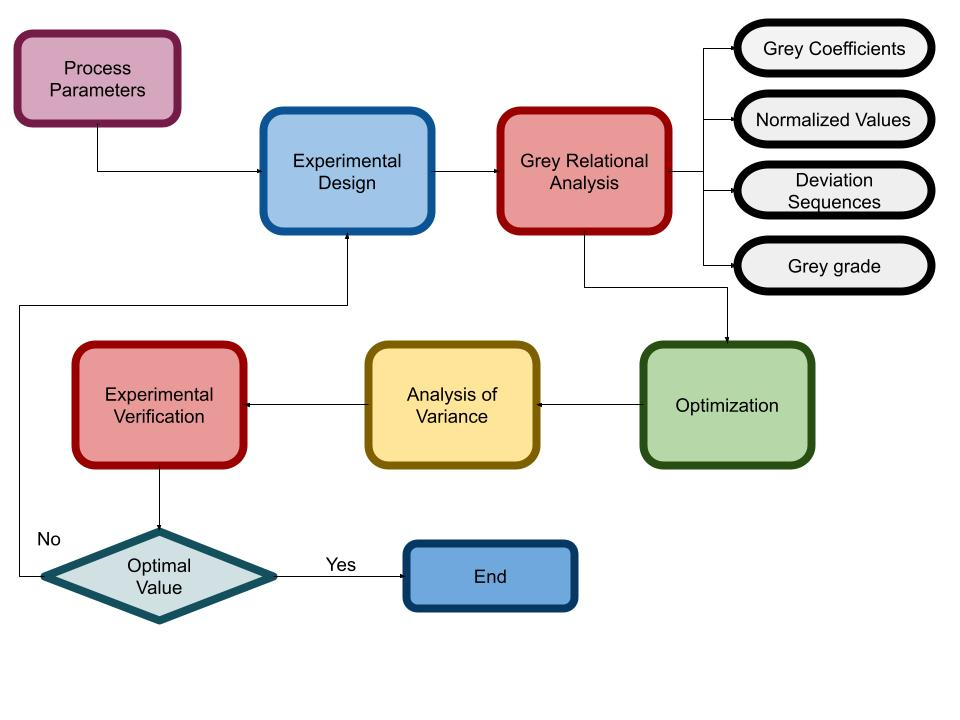

Virtual Reality (VR) immerses users in a simulated, computer-generated environment and creates a sense of presence, allowing individuals to interact with and experience virtual worlds. Human-Machine Interaction (HMI) refers to the communication and interaction between humans and machines. Optimization plays a crucial role in both VR and HMI, enhancing the overall user experience and system performance. This paper proposes an architecture that combines a Hidden Markov Model (HMM) with Grey Relational Analysis (GRA) and the Salp Swarm Algorithm (SSA) for automated HMI. The proposed architecture is termed Hidden Markov Model Grey Relational Salp Swarm (HMM_GRSS). The HMM_GRSS model estimates the feature vectors of the variables in the VR platform and computes the feature spaces. The HMM models the temporal behavior and dynamics of the system, supporting prediction and understanding of the interactions. GRA evaluates the relationship and relevance between variables, aiding feature selection and optimization. The SSA optimizes the feature spaces by simulating the collective behavior of salp swarms, improving the efficiency and effectiveness of the HMI system. Together, these components aim to enhance the automated HMI process in a VR platform, improving interaction and communication between humans and machines. Simulation analysis indicates a significant outcome for the proposed HMM_GRSS model in estimating Human-Machine Interaction.
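
To make the role of Grey Relational Analysis more concrete, the following is a minimal sketch of how a grey relational grade could rank candidate variables against a reference sequence. It is an illustration of the standard GRA formula under stated assumptions, not the HMM_GRSS implementation: the function names, the min-max normalisation step, the distinguishing coefficient `zeta = 0.5`, and the sample data are all hypothetical and do not come from the paper.

```python
def min_max(column):
    """Scale a sequence to [0, 1]; a constant sequence maps to all zeros."""
    lo, hi = min(column), max(column)
    span = hi - lo
    if span == 0:
        return [0.0 for _ in column]
    return [(v - lo) / span for v in column]


def grey_relational_grades(reference, candidates, zeta=0.5):
    """Return one grey relational grade per candidate sequence.

    reference  : list of floats (hypothetical target behaviour)
    candidates : list of lists, each the same length as `reference`
    zeta       : distinguishing coefficient, assumed to be 0.5 here
    """
    ref = min_max(reference)
    # Absolute deviation of every normalised candidate from the reference.
    deltas = []
    for col in candidates:
        norm = min_max(col)
        deltas.append([abs(r - c) for r, c in zip(ref, norm)])

    # Global minimum and maximum deviation over all candidates and samples.
    flat = [d for row in deltas for d in row]
    d_min, d_max = min(flat), max(flat)
    if d_max == 0:
        # Every candidate matches the reference exactly after scaling.
        return [1.0] * len(candidates)

    grades = []
    for row in deltas:
        # Grey relational coefficient per sample, averaged into a grade.
        coeffs = [(d_min + zeta * d_max) / (d + zeta * d_max) for d in row]
        grades.append(sum(coeffs) / len(coeffs))
    return grades


if __name__ == "__main__":
    # Hypothetical data: one reference signal and two candidate variables.
    reference = [1.0, 2.0, 3.0, 4.0]
    candidates = [
        [2.0, 4.0, 6.0, 8.0],  # follows the reference after scaling
        [4.0, 3.0, 2.0, 1.0],  # moves opposite to the reference
    ]
    for i, grade in enumerate(grey_relational_grades(reference, candidates)):
        print(f"candidate {i}: grade = {grade:.4f}")
```

A higher grade indicates a variable whose normalised trajectory stays closer to the reference, which is the sense in which GRA can support feature selection. How the paper couples these grades with the HMM and the SSA is not specified in the abstract.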
