Multi-Network Feature Fusion Facial Emotion Recognition using Nonparametric Method with Augmentation


Abstract

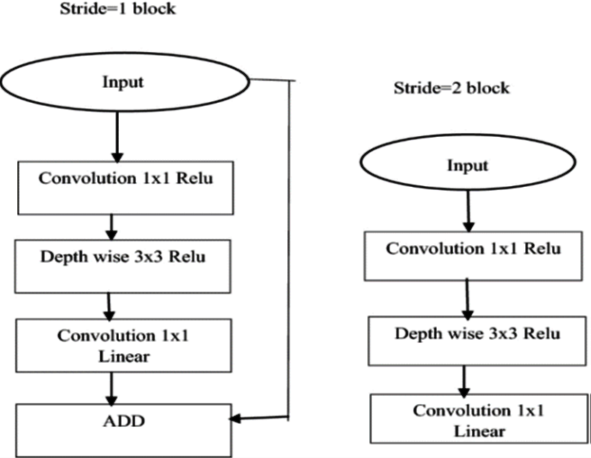

Identifying and predicting emotions from facial expressions is one of the most difficult problems in computer science. Conventional methods depend heavily on pre-processing and feature extraction. Because of its broad potential in areas such as artificial intelligence, emotion detection from facial expressions has become an essential capability, and because it is both a difficult and a fascinating problem in computer vision, it has attracted a great deal of work. Researchers in this area aim to improve machine predictions by developing methods that read and encode facial expressions, and, following the striking success of deep learning, many architectures within that framework are being applied to improve the method's efficacy. For emotion identification and prediction from 2D facial expressions, this study targets the Face Expression Recognition dataset and presents the practical implementation and evaluation of learning algorithms such as several CNNs. Its focus is a facial emotion recognition system built on a convolutional neural network supplemented with data augmentation. The method can use face images to identify seven basic emotions: anger, contempt, fear, happiness, neutrality, sadness, and surprise. A convolutional neural network that makes use of data augmentation, feature fusion, and the neighborhood components analysis (NCA) feature selection approach can help address some of the drawbacks of current models while also improving on their validation accuracy. A novel feature-based transfer learning technique is built from the pre-trained networks MobileNetV2 and DenseNet-201. We highlight the contributions addressed and the architectures and databases used, and we trace the development of the field by comparing the proposed approaches and the results they produce. By reviewing relevant recent studies and offering suggestions on how to advance the field, this study also aims to help guide future researchers.
The proposed system achieves a recognition rate of 75.31%, an improvement over the result of the previous feature-fusion study.
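The abstract does not say which augmentations were applied. As a hedged illustration only, assuming grayscale face crops stored as 2D lists of pixel values, two common label-preserving augmentations for facial expression data (random horizontal flips and small random translations with zero padding) can be sketched as follows. The function names, the shift range, and the flip probability are hypothetical choices, not the authors' settings.

```python
import random
from typing import List, Tuple

# Hypothetical representation: a grayscale image as rows of pixel values.
Image = List[List[float]]


def horizontal_flip(img: Image) -> Image:
    """Mirror the image left to right (a facial expression keeps its label)."""
    return [list(reversed(row)) for row in img]


def random_shift(img: Image, max_shift: int, rng: random.Random) -> Image:
    """Translate by up to max_shift pixels in each axis, padding with zeros."""
    h, w = len(img), len(img[0])
    dy = rng.randint(-max_shift, max_shift)
    dx = rng.randint(-max_shift, max_shift)
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            sy, sx = y - dy, x - dx  # source pixel that lands at (y, x)
            if 0 <= sy < h and 0 <= sx < w:
                out[y][x] = img[sy][sx]
    return out


def augment(img: Image, rng: random.Random,
            max_shift: int = 2, flip_prob: float = 0.5) -> Image:
    """Randomly flip, then randomly shift, one image."""
    if rng.random() < flip_prob:
        img = horizontal_flip(img)
    return random_shift(img, max_shift, rng)


def augment_dataset(images: List[Image], labels: List[str], copies: int,
                    rng: random.Random) -> Tuple[List[Image], List[str]]:
    """Keep the originals and append `copies` augmented versions of each."""
    out_imgs, out_labels = list(images), list(labels)
    for img, lab in zip(images, labels):
        for _ in range(copies):
            out_imgs.append(augment(img, rng))
            out_labels.append(lab)
    return out_imgs, out_labels


if __name__ == "__main__":
    rng = random.Random(0)
    face = [[float(10 * r + c) for c in range(4)] for r in range(4)]
    imgs, labs = augment_dataset([face], ["happiness"], copies=2, rng=rng)
    print(len(imgs), labs)
```

Augmenting only the training split, and keeping the originals alongside their augmented copies, is the usual design; in a real pipeline the same transforms would typically come from an image library rather than nested loops.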

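The abstract names the main pipeline (features from the pre-trained MobileNetV2 and DenseNet-201 networks, fused and then filtered by NCA feature selection) without giving details. The sketch below illustrates the last two steps under stated assumptions: fusion is taken to be plain concatenation of each image's two feature vectors (the networks are not run here; any lists of floats stand in for their pooled outputs), and selection uses the feature-weighting formulation of NCA (the variant used for feature selection, as in MATLAB's `fscnca`, rather than the original metric-learning NCA). It learns one weight per feature by gradient ascent on the mean leave-one-out soft nearest-neighbor accuracy minus an L2 penalty. The function names and the values of `lam`, `sigma`, `lr`, `iters`, and `rel_threshold` are hypothetical, not the authors' method or settings.

```python
import math
import random
from typing import List, Sequence

Vector = List[float]


def fuse_features(feats_a: Sequence[Sequence[float]],
                  feats_b: Sequence[Sequence[float]]) -> List[Vector]:
    """Feature-level fusion (assumed): concatenate each image's two vectors."""
    return [list(a) + list(b) for a, b in zip(feats_a, feats_b)]


def nca_feature_weights(X: List[Vector], y: List[int], lam: float = 0.01,
                        sigma: float = 1.0, lr: float = 0.1,
                        iters: int = 100) -> Vector:
    """Learn one weight per feature by gradient ascent on
    (1/n) * sum_i p_i - lam * sum_r w_r^2, where p_i is the probability that a
    soft nearest neighbor of sample i (weighted L1 distance) shares its label."""
    n, d = len(X), len(X[0])
    w = [1.0] * d
    for _ in range(iters):
        grad_sum = [0.0] * d
        for i in range(n):
            others = [j for j in range(n) if j != i]
            dist = [sum(w[r] ** 2 * abs(X[i][r] - X[j][r]) for r in range(d))
                    for j in others]
            # Softmax over -dist/sigma; subtracting the minimum avoids underflow.
            dmin = min(dist)
            expo = [math.exp(-(dj - dmin) / sigma) for dj in dist]
            z = sum(expo)
            p = [e / z for e in expo]
            same = [y[j] == y[i] for j in others]
            p_i = sum(pj for pj, s in zip(p, same) if s)
            for r in range(d):
                diffs = [abs(X[i][r] - X[j][r]) for j in others]
                all_term = sum(pj * dr for pj, dr in zip(p, diffs))
                same_term = sum(pj * dr for pj, dr, s in zip(p, diffs, same) if s)
                grad_sum[r] += p_i * all_term - same_term
        # d/dw_r of the objective: 2 * w_r * (grad_sum_r / (n * sigma) - lam).
        w = [w[r] + lr * 2 * w[r] * (grad_sum[r] / (n * sigma) - lam)
             for r in range(d)]
    return w


def select_features(weights: Vector, rel_threshold: float = 0.5) -> List[int]:
    """Keep features whose |weight| is at least rel_threshold * max |weight|."""
    top = max(abs(v) for v in weights)
    return [r for r, v in enumerate(weights) if abs(v) >= rel_threshold * top]


if __name__ == "__main__":
    rng = random.Random(0)
    labels = [0] * 20 + [1] * 20
    # Toy stand-ins: network A's feature separates the classes, B's is noise.
    feats_a = [[3.0 * c + rng.uniform(-0.3, 0.3)] for c in labels]
    feats_b = [[rng.uniform(0.0, 3.0)] for _ in labels]
    fused = fuse_features(feats_a, feats_b)
    weights = nca_feature_weights(fused, labels)
    print(weights, select_features(weights))
```

The weights would normally be learned on training-set features only, and the selected indices then applied to validation and test features before the final classifier. Each iteration costs O(n²d), so this pure-Python version only suits toy data.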
