Deep Reinforcement Learning DDPG Algorithm with AM based Transferable EMS for FCHEVs

Abstract

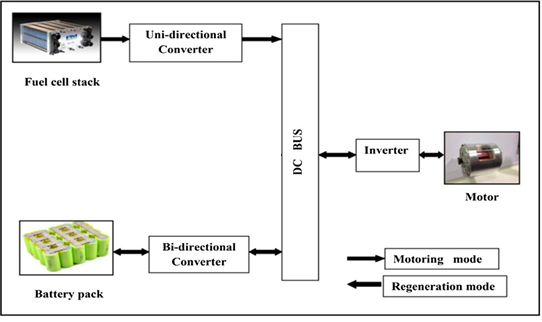

Fuel cell hybrid electric vehicles (FCHEVs) are powered by hydrogen fuel cells. They are more efficient than vehicles based on conventional internal combustion engines and produce no harmful tailpipe emissions; they emit only water vapor and warm air. FCHEVs demand fast dynamic responses during acceleration and braking. To meet this demand, the fuel cell (FC) is paired with a battery that serves as an auxiliary energy storage source. This paper discusses the development of an energy management strategy (EMS) for power-split FC-based hybrid electric vehicles using the deep deterministic policy gradient (DDPG) algorithm, which is based on deep reinforcement learning (DRL). Existing DRL-based energy management techniques suffer from weak constraint handling, slow learning, and unstable convergence. To address these limitations, the paper proposes an action masking (AM) technique that prevents the DDPG-based approach from generating invalid actions that violate the system's physical limits. In addition, a transfer learning (TL) approach for the DDPG-based strategy was investigated in order to avoid repeated neural network training across different driving cycles. The findings demonstrate that the proposed DDPG-based approach, combined with the AM and TL methods, overcomes the limitations of current DRL-based approaches and provides an effective energy management strategy for power-split FCHEVs with reduced agent training time.
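The abstract does not spell out how the action masking works, so the following is only a minimal sketch of one possible reading: the actor's continuous output is projected onto the interval of fuel cell power that keeps both the fuel cell and the battery within their limits. The names `Limits`, `feasible_fc_range`, and `mask_action`, as well as every numeric limit, are hypothetical and are not taken from the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Limits:
    # Hypothetical values for illustration only; not taken from the paper.
    fc_power_max_kw: float = 60.0
    batt_discharge_max_kw: float = 40.0
    batt_charge_max_kw: float = 30.0
    soc_min: float = 0.3
    soc_max: float = 0.8


def feasible_fc_range(demand_kw, soc, lim):
    """Return (low, high) fuel cell power in kW that respects the limits."""
    # Battery power = demand - fuel cell power (positive means discharge).
    # Assumption: no discharge at or below soc_min, no charge at or above soc_max.
    max_discharge = lim.batt_discharge_max_kw if soc > lim.soc_min else 0.0
    max_charge = lim.batt_charge_max_kw if soc < lim.soc_max else 0.0
    low = max(0.0, demand_kw - max_discharge)
    high = min(lim.fc_power_max_kw, max(0.0, demand_kw + max_charge))
    # If the demand cannot be met, fall back to the nearest reachable point.
    if low > high:
        low = high
    return low, high


def mask_action(raw_action, demand_kw, soc, lim=Limits()):
    """Map an actor output in [0, 1] to a feasible fuel cell power in kW."""
    low, high = feasible_fc_range(demand_kw, soc, lim)
    requested = raw_action * lim.fc_power_max_kw
    # Clip the requested power into the feasible interval.
    return min(max(requested, low), high)
```

In this sketch, an actor output of 0.0 at a 50 kW demand and a mid-range state of charge is raised to the minimum fuel cell power the battery cannot cover, rather than being passed to the powertrain as an infeasible command. Clipping is only one way to enforce such limits; the paper's AM technique may differ.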
