Abstractive Summarization with Efficient Transformer Based Approach

Abstract

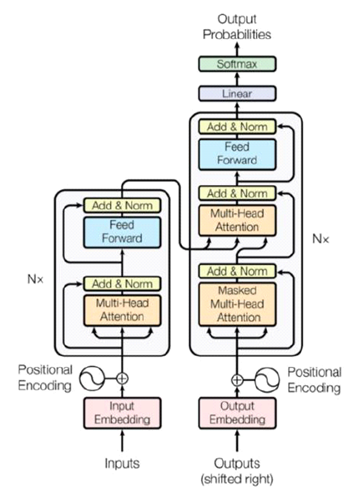

The rapid proliferation of online data has made it an important research problem to shorten a document while keeping its essential information. Such data must be summarized so that meaningful knowledge can be recovered in an acceptable time; this task is called text summarization. Summarization is either extractive or abstractive. In recent years, abstractive text summarization has become increasingly popular. Abstractive Text Summarization (ATS) aims to capture the most vital content of a text corpus and condense it into a shorter text while preserving its meaning and its semantic and grammatical accuracy. Deep learning architectures have opened a new phase in natural language processing (NLP). Many studies have demonstrated the competitive performance of architectures such as recurrent neural networks (RNNs), long short-term memory (LSTM) networks, and attention mechanisms. The Transformer, a more recently introduced model, relies on the attention mechanism. In this paper, abstractive text summarization is performed with four architectures: a basic Transformer, a Transformer with a pointer generation network (PGN) and coverage mechanism, a Fastformer, and a Fastformer with a PGN and coverage mechanism. We compare these architectures after careful and thorough hyperparameter tuning. The experiments use the standard CNN/DM dataset to evaluate the architectures on the task of abstractive summarization.
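The abstract names a pointer generation network and a coverage mechanism without describing them. As a rough illustration only, the sketch below follows the commonly used pointer-generator formulation of See, Liu, and Manning (2017): the output distribution mixes a vocabulary distribution with a copy distribution taken from the attention weights, and the coverage loss penalizes attending again to source positions that were already attended. The function names, the toy inputs, and the use of plain Python lists are assumptions made for this example; they are not taken from the paper's implementation.

```python
from typing import Dict, List


def final_distribution(
    p_gen: float,
    vocab_dist: Dict[str, float],
    attention: List[float],
    source_tokens: List[str],
) -> Dict[str, float]:
    """Mix generating and copying: p_gen * P_vocab(w) + (1 - p_gen) * copy(w)."""
    # Generation part: scale every vocabulary probability by p_gen.
    mixed = {word: p_gen * prob for word, prob in vocab_dist.items()}
    # Copy part: add the attention mass of each source position to its token,
    # which lets out-of-vocabulary source words receive probability.
    for weight, token in zip(attention, source_tokens):
        mixed[token] = mixed.get(token, 0.0) + (1.0 - p_gen) * weight
    return mixed


def coverage_loss(attention: List[float], coverage: List[float]) -> float:
    """Sum over source positions of min(attention, coverage) for one decoder step."""
    return sum(min(a, c) for a, c in zip(attention, coverage))


def update_coverage(coverage: List[float], attention: List[float]) -> List[float]:
    """Coverage is the running sum of attention over previous decoder steps."""
    return [c + a for c, a in zip(coverage, attention)]


# Toy example (hypothetical values): "paris" is not in the vocabulary,
# so it can only be produced by copying from the source.
source = ["paris", "hosts", "summit"]
vocab = {"the": 0.5, "hosts": 0.3, "summit": 0.2}
attn = [0.6, 0.3, 0.1]

dist = final_distribution(0.5, vocab, attn, source)
coverage = [0.0, 0.0, 0.0]
first_step_loss = coverage_loss(attn, coverage)   # nothing attended yet
coverage = update_coverage(coverage, attn)
repeat_step_loss = coverage_loss(attn, coverage)  # same attention again is penalized
```

In this toy run the out-of-vocabulary token "paris" receives probability only through the copy term, and repeating the same attention pattern on the second step yields a larger coverage loss than the first step, which is the behavior the coverage mechanism is meant to discourage.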
