DeepQ Residue Representation of Moving Object Images using YOLO in Video Surveillance Environment

Abstract

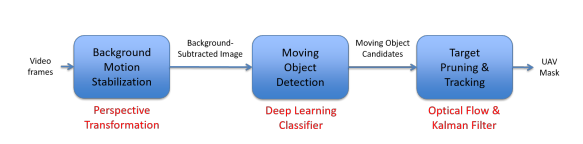

The IAEA image review software provides functions for scene-change detection, black-image detection, and missing-scene analysis, but its capabilities are limited. Current workflows for detecting safeguards-relevant activities rely heavily on inspectors' laborious visual examination of surveillance videos, which is a time-consuming and error-prone process. This paper proposes using object-based motion detection and deep machine learning to identify objects of interest in video streams in order to improve accuracy and reduce inspector workload. A perspective transformation model is used to estimate background motion, and a deep learning classifier trained on manually labeled datasets is used to identify moving-object candidates within the background-subtracted image. Through optical flow matching, we extract spatio-temporal characteristics for each moving-object candidate and then prune the candidates based on how their motion patterns differ from those of the background. To improve the temporal consistency of the candidate detections, a Kalman filter is applied to the pruned moving objects. The algorithm was demonstrated on a UAV-derived video dataset. The results show that our algorithm can effectively detect small UAVs using limited computing resources.
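The temporal-consistency step can be illustrated with a minimal sketch. It assumes a constant-velocity motion model applied independently to the x and y image coordinates of one candidate's centroid; the abstract does not specify the state model, so the names `AxisKalman` and `smooth_track` and the noise values `q` and `r` are hypothetical choices made only for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class AxisKalman:
    """Constant-velocity Kalman filter for one image axis (state: position, velocity)."""
    pos: float
    vel: float = 0.0
    # 2x2 state covariance stored as rows; large initial uncertainty (assumed).
    p: list = field(default_factory=lambda: [[100.0, 0.0], [0.0, 100.0]])
    q: float = 0.01  # process noise added per step (assumed value)
    r: float = 4.0   # measurement noise variance in pixels^2 (assumed value)

    def predict(self, dt: float = 1.0) -> float:
        """Advance the state by one frame: x <- F x, P <- F P F^T + Q."""
        self.pos += self.vel * dt
        (a, b), (c, d) = self.p
        self.p = [
            [a + dt * (b + c) + dt * dt * d + self.q, b + dt * d],
            [c + dt * d, d + self.q],
        ]
        return self.pos

    def update(self, z: float) -> float:
        """Correct the state with a measured position z (H = [1, 0])."""
        (a, b), (c, d) = self.p
        s = a + self.r         # innovation variance
        k0, k1 = a / s, c / s  # Kalman gain for position and velocity
        y = z - self.pos       # innovation
        self.pos += k0 * y
        self.vel += k1 * y
        # P <- (I - K H) P
        self.p = [[(1 - k0) * a, (1 - k0) * b], [c - k1 * a, d - k1 * b]]
        return self.pos


def smooth_track(detections):
    """Smooth a list of (x, y) centroids; None marks a frame with no detection."""
    first = next(d for d in detections if d is not None)
    fx, fy = AxisKalman(first[0]), AxisKalman(first[1])
    out = []
    for det in detections:
        x, y = fx.predict(), fy.predict()
        if det is not None:
            x, y = fx.update(det[0]), fy.update(det[1])
        out.append((x, y))
    return out
```

Calling `smooth_track([(10, 5), (12, 6), (14, 7), (16, 8), None, (20, 10)])` returns one estimate per frame. For the frame marked `None`, the filter falls back on its prediction, which is how a candidate that is briefly missed can still be carried across frames.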
