Smart Multi-Model Emotion Recognition System with Deep learning

Abstract

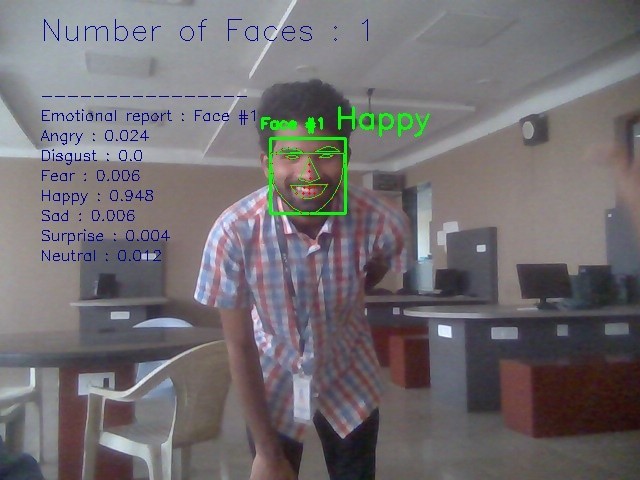

Emotion recognition adds a new dimension to sentiment analysis. This paper presents a multi-modal human emotion recognition web application that considers three traits, namely speech, text, and facial expressions, to extract and analyze the emotions of people who are giving interviews. With the rapid development of machine learning, artificial intelligence, and deep learning, emotion recognition is receiving more attention from researchers. Machines are said to be intelligent only if they can perform human recognition or sentiment analysis. Emotion recognition helps in applications such as detecting spam and blackmail calls, customer service, lie detection, audience engagement, and identifying suspicious behavior. This paper focuses on facial expression analysis, carried out using deep learning approaches, together with speech signals and input text.
