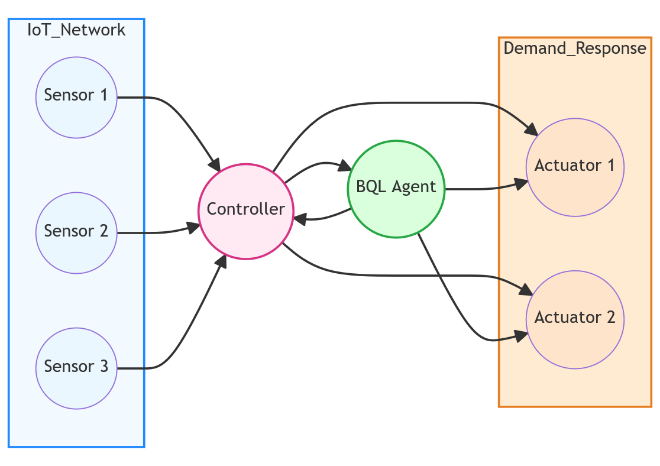

BQL-DRS: A Novel Balanced Q-Learning-Based Demand Response System for IoT-Based Smart Grids


Abstract

The modernization of electricity networks and the integration of renewable energy resources in Internet of Things (IoT)-based smart grids have increased the variability of market prices, making effective demand response (DR) strategies necessary. To address this challenge, this paper proposes a novel Balanced Q-Learning-based Demand Response System (BQL-DRS) that combines optimistic and pessimistic targets in the Q-learning algorithm to balance decision-making in IoT-based smart grids. BQL-DRS optimizes DR actions by managing consumer demand in real time, using IoT data on grid conditions, energy prices, and consumer preferences. Its significance lies in its ability to handle dynamic and uncertain IoT-based grid environments, making informed yet cautious decisions while pursuing energy efficiency and cost-effectiveness. By accounting for both optimistic and pessimistic scenarios, BQL-DRS supports grid stability and load balancing and achieves substantial cost savings compared with representative models.
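The abstract names the core idea, combining optimistic and pessimistic targets in Q-learning, without stating the update rule. The sketch below assumes one simple form of such a blend: a tabular update whose bootstrap term is beta * max_a' Q(s', a') + (1 - beta) * min_a' Q(s', a') with a fixed weight beta, so that beta = 1 recovers standard Q-learning and beta = 0 is fully pessimistic. How BQL-DRS actually sets this weight is not stated here. The price levels, load choices, comfort penalty, deterministic price cycle, and all function names (`reward`, `next_state`, `balanced_target`, `train`, `greedy_policy`) are hypothetical illustrations, not the paper's state space, reward, or implementation.

```python
import random

# HYPOTHETICAL toy DR setting (illustrative numbers, not from the paper):
# states are price levels following a fixed low -> medium -> high cycle,
# actions are consumption choices for one flexible load.
PRICES = [0.10, 0.20, 0.40]   # $/kWh in the low, medium, high price states
LOADS = [0.5, 1.0, 1.5]       # kWh: curtail, normal use, shift extra load in
COMFORT_PENALTY = 0.15        # cost per kWh of deviation from normal (1 kWh) use


def reward(state, action):
    """Negative energy cost minus a discomfort penalty for deviating from 1 kWh."""
    load = LOADS[action]
    return -(PRICES[state] * load) - COMFORT_PENALTY * abs(load - 1.0)


def next_state(state):
    """Deterministic price cycle: low -> medium -> high -> low."""
    return (state + 1) % len(PRICES)


def balanced_target(q_next, r, gamma, beta):
    """Blend the optimistic (max) and pessimistic (min) bootstrap estimates."""
    return r + gamma * (beta * max(q_next) + (1.0 - beta) * min(q_next))


def train(beta=0.7, alpha=0.1, gamma=0.9, epsilon=0.3, steps=60000, seed=0):
    """Tabular Q-learning with the balanced target and epsilon-greedy exploration."""
    rng = random.Random(seed)
    q = [[0.0] * len(LOADS) for _ in PRICES]
    s = 0
    for _ in range(steps):
        if rng.random() < epsilon:
            a = rng.randrange(len(LOADS))               # explore
        else:
            a = max(range(len(LOADS)), key=lambda i: q[s][i])  # exploit
        s2 = next_state(s)
        target = balanced_target(q[s2], reward(s, a), gamma, beta)
        q[s][a] += alpha * (target - q[s][a])           # move toward the target
        s = s2
    return q


def greedy_policy(q):
    """Index of the highest-valued action in each price state."""
    return [max(range(len(row)), key=lambda i: row[i]) for row in q]


if __name__ == "__main__":
    print(greedy_policy(train()))  # [1, 0, 0]
```

In this toy cycle the learned policy keeps normal consumption at the low price and curtails at the medium and high prices. Because the next price here does not depend on the action, beta shifts the learned values but not this policy; it would matter in settings where actions influence future states, which is where a cautious (lower beta) target changes behavior.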
