A Comparative Analysis for Filter-Based Feature Selection Techniques with Tree-based Classification

Abstract

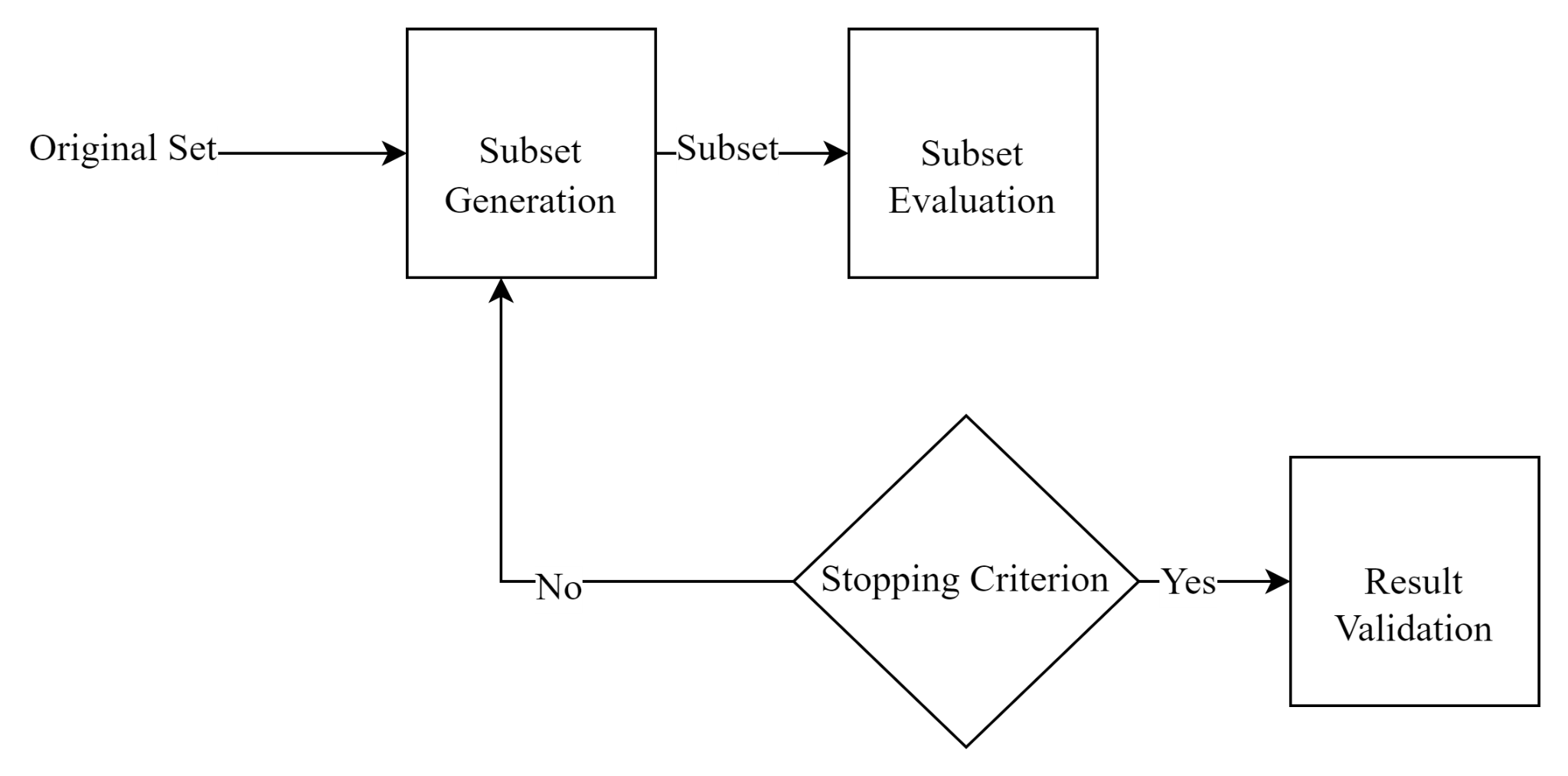

Feature selection is an essential pre-processing step in research areas such as data mining, text mining, and image processing. Raw datasets used for machine learning prediction typically combine many attributes and are both large and high-dimensional. When such datasets are used for classification, the many inconsistent and redundant features they contain consume additional time and computing resources and degrade the classification results. To improve the efficiency and performance of classification, these features have to be eliminated. A variety of feature subset selection methods have been proposed to find and eliminate as many redundant and useless features as feasible. This work presents a comparative analysis of filter-based feature selection techniques combined with tree-based classification. Several feature selection techniques and classifiers are applied to different datasets using the Weka tool. We evaluate the performance of six feature selection techniques and their effects on decision tree classifiers using 10-fold cross-validation on three datasets. The results show that the ChiSquaredAttributeEval feature selection method with Ranker search, combined with the Random Forest classifier, outperforms the other methods in both effectiveness and efficiency, and that it is applicable to numerous real datasets in several application domains.
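To illustrate the idea behind chi-squared ranking of features, the following is a minimal Python sketch, not the Weka implementation used in the study. It computes the Pearson chi-squared statistic between each nominal feature and the class label, then sorts the features by score, which is roughly what an evaluator paired with a ranking search does. The function names (`chi_squared`, `rank_features`) and the toy data are hypothetical and chosen only for this example. Numeric attributes, the cross-validation step, and the tree-based classifier are all omitted.

```python
from collections import Counter


def chi_squared(feature, labels):
    """Pearson chi-squared statistic between one nominal feature and the class."""
    n = len(labels)
    observed = Counter(zip(feature, labels))  # joint counts (value, class)
    feature_totals = Counter(feature)         # marginal counts per feature value
    class_totals = Counter(labels)            # marginal counts per class
    stat = 0.0
    for value, f_count in feature_totals.items():
        for cls, c_count in class_totals.items():
            # Expected count if the feature and the class were independent.
            expected = f_count * c_count / n
            stat += (observed[(value, cls)] - expected) ** 2 / expected
    return stat


def rank_features(columns, labels):
    """Return (feature name, score) pairs sorted from highest to lowest score."""
    scores = {name: chi_squared(values, labels) for name, values in columns.items()}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Hypothetical toy data: one feature mirrors the class, the other is unrelated.
labels = ["yes", "yes", "yes", "yes", "no", "no", "no", "no"]
columns = {
    "informative": ["a", "a", "a", "a", "b", "b", "b", "b"],
    "noisy": ["a", "b", "a", "b", "a", "b", "a", "b"],
}

ranking = rank_features(columns, labels)
for name, score in ranking:
    print(f"{name}: {score:.2f}")
```

In a filter-based pipeline, only the top-ranked features would then be passed to the classifier. Because the score is computed independently of any classifier, the ranking is cheap to obtain compared with wrapper methods.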
