Critical Analysis on Multimodal Emotion Recognition in Meeting the Requirements for Next Generation Human Computer Interactions

Abstract

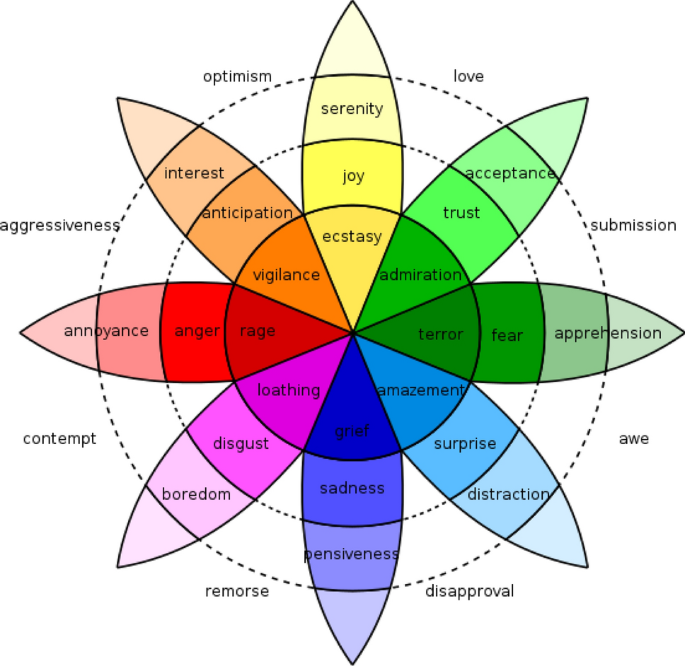

Emotion recognition remains a gap in today's Human Computer Interaction (HCI). Current systems lack the ability to effectively recognize, express, and feel emotion, which limits their interaction with humans, and they are still not sufficiently sensitive to human emotions. Multimodal emotion recognition attempts to address this gap by measuring emotional state from gestures, facial expressions, acoustic characteristics, and textual expressions. Multimodal data acquired from video, audio, sensors, etc. are combined using various techniques to classify basic human emotions such as happiness, joy, neutrality, surprise, sadness, disgust, fear, and anger. This work presents a critical analysis of multimodal emotion recognition approaches in meeting the requirements of next generation human computer interactions. The study first explores and defines the requirements of next generation human computer interactions and then critically analyzes how well existing multimodal emotion recognition approaches address those requirements.
